MiniCPM

| Introduction: | MiniCPM is a series of ultra-efficient large language models (LLMs) designed for high-performance deployment on end devices, achieving significant speedups and competitive performance against larger models. |

| Recorded in: | 6/9/2025 |


What is MiniCPM?

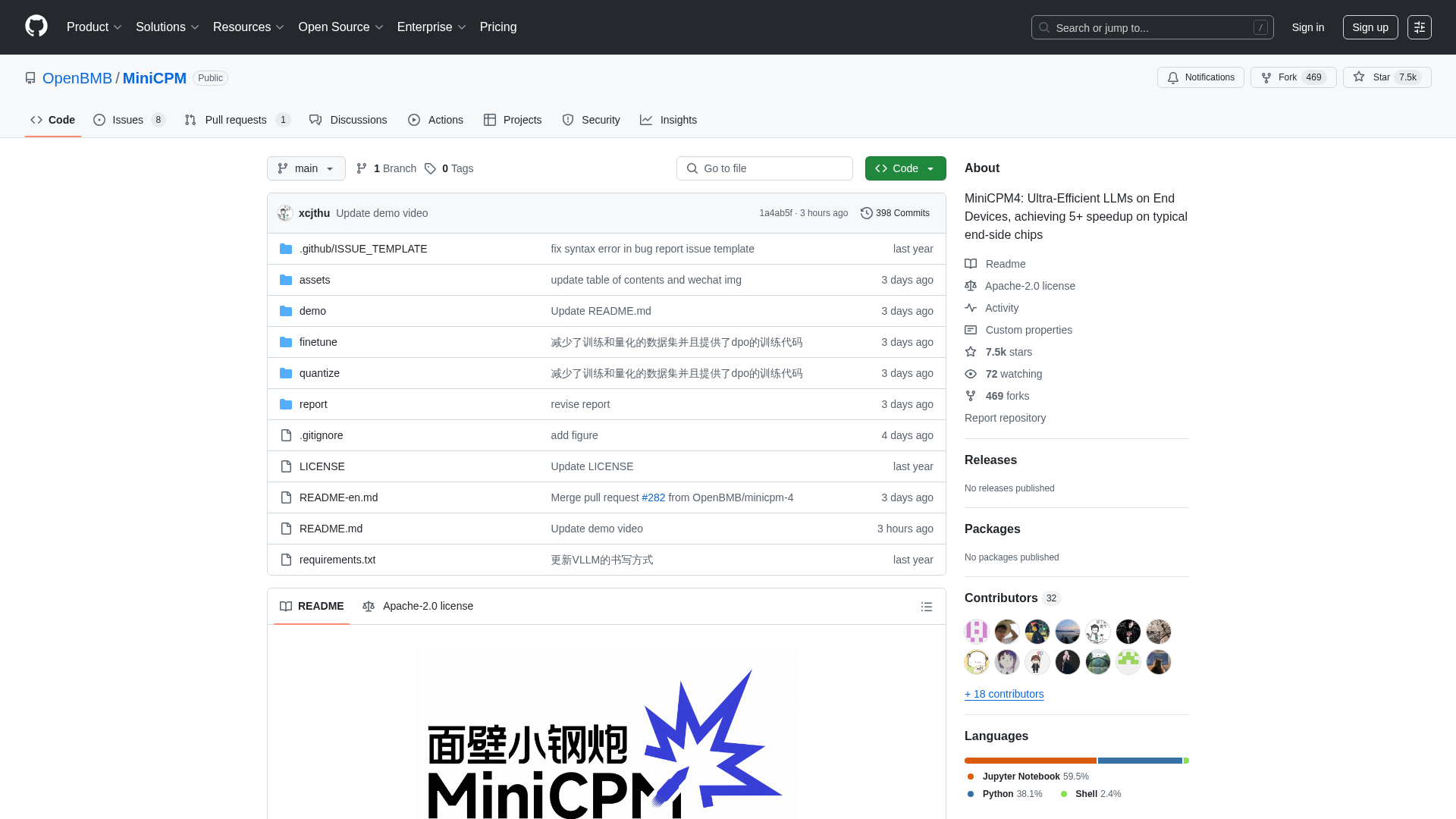

MiniCPM is an open-source project developed by OpenBMB, THUNLP, and ModelBest. It offers a family of highly efficient large language models (LLMs) optimized for deployment on resource-constrained end devices. It targets developers, researchers, and organizations that want to bring strong AI capabilities to edge computing environments without sacrificing performance. The family spans model sizes from 0.5B to 8B parameters and draws its efficiency from optimized architectures, learning algorithms, and inference systems, which lets it perform well on tasks such as long-text processing, tool calling, and code interpretation.

How to use MiniCPM

Users can get started with MiniCPM by cloning its GitHub repository, which provides the inference code and access to the model weights. The models are available for download on HuggingFace and ModelScope. For inference, users can choose among several optimized frameworks: CPM.cu (recommended for maximum efficiency), HuggingFace Transformers, vLLM, SGLang, llama.cpp, Ollama, fastllm, and mlx_lm. The project also offers detailed guides for fine-tuning with tools like LLaMA-Factory and for advanced functionality such as tool calling and code interpretation. Because the project is open source under the Apache-2.0 license, there is no registration requirement or pricing model; users can freely download the models and integrate them into their applications.
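As an illustration of the HuggingFace Transformers route mentioned above, the following is a minimal sketch rather than the project's official example. The model ID `openbmb/MiniCPM4-8B`, the helper names `build_messages` and `generate_reply`, and the generation settings are assumptions; check the model card on HuggingFace for the exact identifier and recommended parameters. It also assumes `torch`, `transformers`, and `accelerate` (needed for `device_map="auto"`) are installed.

```python
# Minimal sketch: chat-style inference with HuggingFace Transformers.
# MODEL_ID is an assumption; confirm the exact name on the HuggingFace hub.
MODEL_ID = "openbmb/MiniCPM4-8B"


def build_messages(user_prompt):
    """Wrap a prompt in the role/content format that chat templates expect."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt, model_id=MODEL_ID, max_new_tokens=256):
    """Load the model and generate one reply (downloads weights on first use)."""
    # Imported lazily so build_messages works without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",
        trust_remote_code=True,
    )
    # Render the chat template and tokenize in one step.
    inputs = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (commented out because it downloads several GB of weights):
# print(generate_reply("Explain what an end-device LLM is in one sentence."))
```

The other frameworks listed above (vLLM, SGLang, llama.cpp, and so on) each have their own loading and serving APIs, so this sketch applies to the Transformers path only.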

MiniCPM's core features

Ultra-Efficient LLM Architecture (InfLLM v2 for sparse attention)

Extreme Efficiency on End Devices (5x+ speedup)

Broad Model Range (0.5B to 8B parameters)

Advanced Quantization (BitCPM4 for ternary, i.e. 3-value, quantization)

Long Context Window Support (up to 128K tokens, i.e. 131,072, with RoPE scaling)

Optimized Inference Frameworks (CPM.cu, vLLM, SGLang, llama.cpp, PowerInfer)

Tool Calling Capability (MiniCPM4-MCP for interacting with 16+ MCP servers)

Code Interpreter Functionality

Automated Survey Generation (MiniCPM4-Survey agent)

High-Quality Training Data (UltraClean, UltraChat v2)
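To make the "3-value quantization" feature above more concrete, here is a small sketch of what ternary quantization means in general: every weight is stored as -1, 0, or +1 together with one shared scale. The absmean scheme shown here is a common generic approach and is an assumption for illustration; it is not taken from the BitCPM4 code, and the function names are hypothetical.

```python
def ternary_quantize(weights):
    """Map each weight to -1, 0, or +1 plus one shared scale (absmean scheme)."""
    # Scale is the mean absolute weight; fall back to 1.0 for all-zero input.
    scale = sum(abs(w) for w in weights) / len(weights) or 1.0
    # Round w / scale to the nearest integer, then clip to the range [-1, 1].
    codes = [max(-1, min(1, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Approximate the original weights from the ternary codes."""
    return [c * scale for c in codes]


weights = [0.9, -0.05, 0.4, -1.2, 0.0, 0.3]
codes, scale = ternary_quantize(weights)
print(codes)  # [1, 0, 1, -1, 0, 1]
print(dequantize(codes, scale))
```

Each weight then needs fewer than two bits of storage, which is where the memory savings on end devices come from; the cost is the approximation error visible in the dequantized values.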

Use cases of MiniCPM

Deploying powerful AI assistants on mobile phones, smart home devices, or embedded systems.

Developing applications that require real-time, efficient processing of long documents or conversations.

Building intelligent agents capable of interacting with external tools and APIs.

Automating the generation of academic literature reviews or research summaries.

Integrating code execution and problem-solving into AI-powered development tools.

Enhancing Retrieval-Augmented Generation (RAG) systems for improved information retrieval.

Fine-tuning compact language models for specialized industry applications with limited computational resources.

Enabling offline AI capabilities in various consumer electronics and industrial equipment.

Research and development in efficient AI and large language models.

Creating custom AI solutions that require high performance on edge devices.
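For the tool-interaction use case in the list above, the application side usually comes down to parsing a structured call emitted by the model and routing it to a function. The sketch below shows that pattern under stated assumptions: the JSON shape (`name` plus `arguments`), the `get_weather` stub, and the `dispatch_tool_call` helper are all hypothetical and do not reflect the actual MiniCPM4-MCP protocol.

```python
import json


def get_weather(city):
    """Stub tool for illustration; a real tool would call an API or MCP server."""
    return f"Sunny in {city}"


# Hypothetical registry mapping tool names to Python callables.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output):
    """Parse a JSON tool call from the model and run the matching tool.

    Returns None when the output is plain text or names an unknown tool.
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return None  # ordinary text answer, no tool requested
    if not isinstance(call, dict) or call.get("name") not in TOOLS:
        return None
    return TOOLS[call["name"]](**call.get("arguments", {}))


result = dispatch_tool_call(
    '{"name": "get_weather", "arguments": {"city": "Beijing"}}'
)
print(result)  # Sunny in Beijing
```

In a full agent loop, the tool result would be appended to the conversation and sent back to the model so it can produce the final answer.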